
| Resource | Info |
| --- | --- |
| Paper | https://arxiv.org/abs/2409.12183 |
| Code & Data | https://github.com/Zayne-sprague/To-CoT-or-not-to-CoT |
| Public | ICLR |
| Date | 2025.06.10 |

Summary Overview

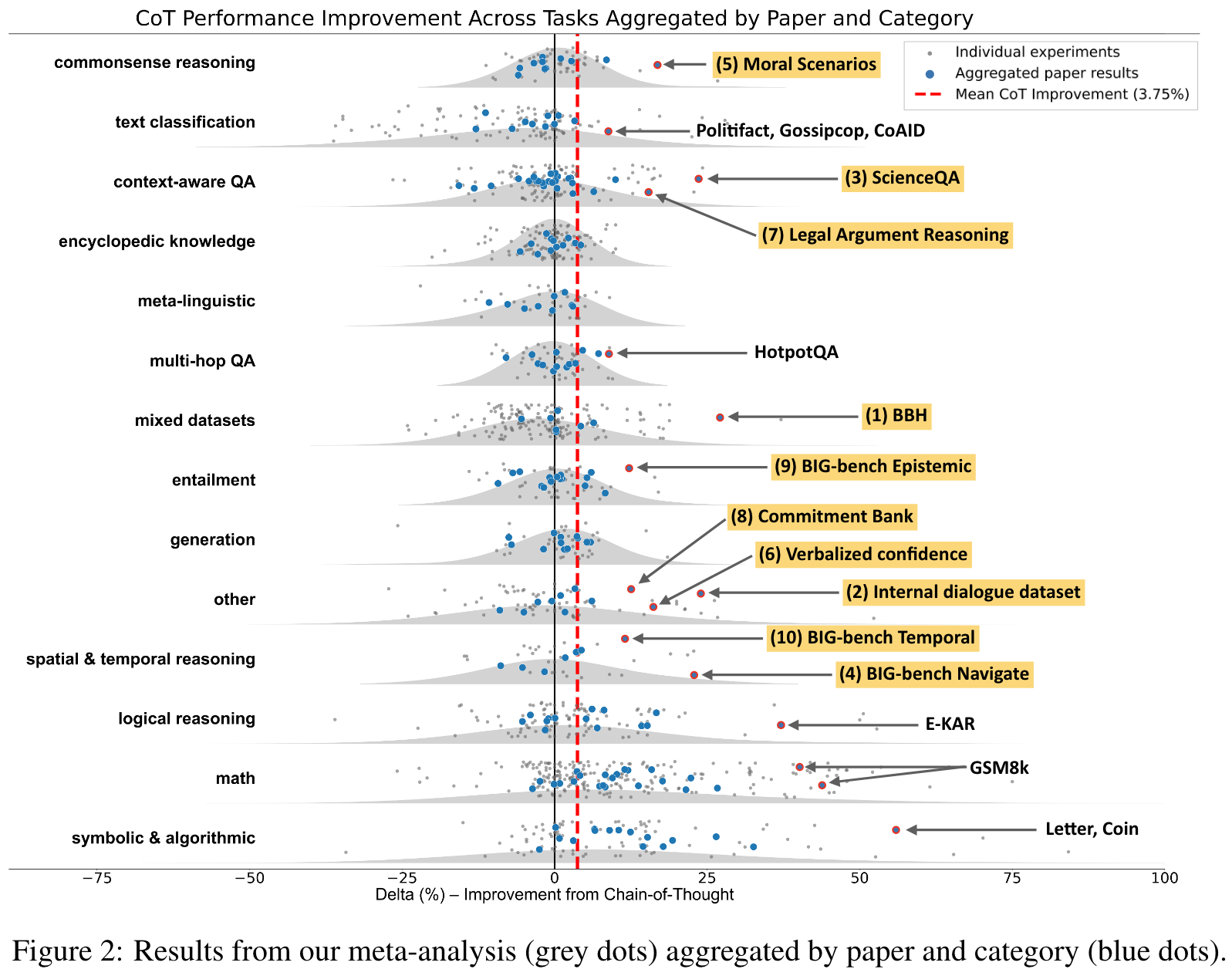

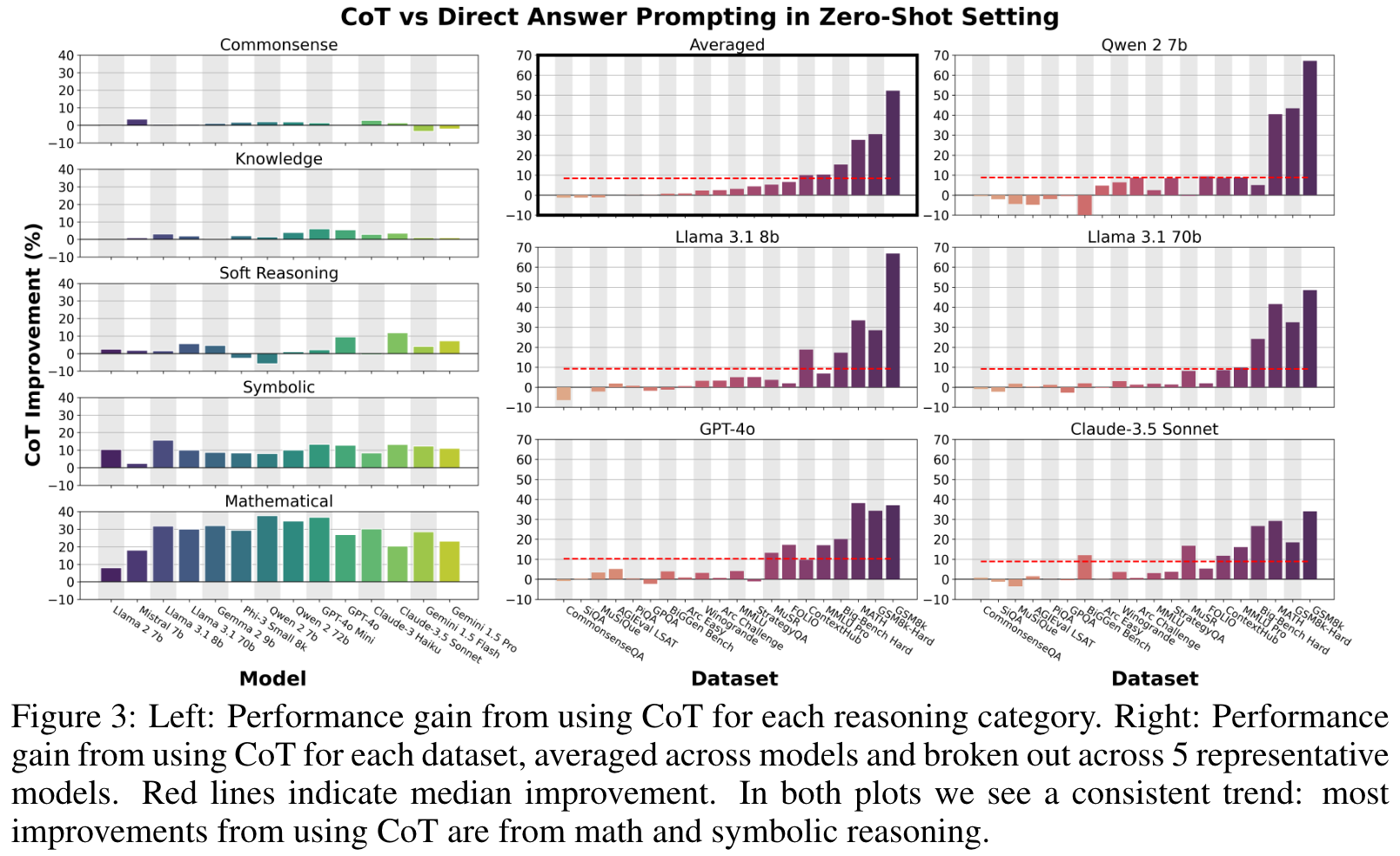

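The paper asks whether CoT improves model performance across all kinds of tasks. It finds that CoT yields large gains only on math and logic tasks; on other task types the benefit is small.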

Main Content

Findings:

- CoT only helps substantially on problems requiring mathematical, logical, or algorithmic reasoning.

- CoT primarily helps with the execution step that performs computation and symbolic manipulation, but falls short of what LLMs with tool augmentation can do.
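The split between planning and execution can be illustrated with a toy example. This is a minimal sketch, not the paper's code: `plan` stands in for a program a model might write, and `run_plan` is a hypothetical helper that hands the execution step to the Python interpreter instead of asking the model to carry out the arithmetic in text.

```python
def run_plan(plan: str) -> object:
    """Execute a model-written plan with the Python interpreter (the "tool").

    Convention assumed here: the plan stores its final result in `answer`.
    Assumption: the plan is trusted; exec must not be used on untrusted text.
    """
    namespace: dict = {}
    exec(plan, {}, namespace)
    return namespace["answer"]


# Hypothetical plan for: "Pens cost 3 dollars each. How much do 17 pens
# and one 12-dollar notebook cost in total?"
plan = """
pens = 17
price_per_pen = 3
notebook = 12
answer = pens * price_per_pen + notebook
"""

result = run_plan(plan)
print(result)
```

With CoT alone, the model would have to both write these steps and compute the result itself; with a tool, only the plan has to be right.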

Where does zero-shot CoT improve over direct prompts? On datasets that require math (MATH, GSM8K) or formal logic (ContextHub, MuSR to a lesser degree) to answer the problem.
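To make the two settings concrete, here is a rough sketch of a direct prompt and a zero-shot CoT prompt. The wording is illustrative and is not the paper's exact prompt text; the function names and the `Answer:` marker are assumptions made for this example.

```python
from typing import Optional


def direct_prompt(question: str) -> str:
    """Ask for the final answer only, with no intermediate reasoning."""
    return f"Question: {question}\nGive only the final answer.\nAnswer:"


def zero_shot_cot_prompt(question: str) -> str:
    """Ask the model to reason first, then give a marked final answer."""
    return (
        f"Question: {question}\n"
        "Let's think step by step, then give the final answer "
        "on a new line starting with 'Answer:'."
    )


def extract_answer(completion: str) -> Optional[str]:
    """Return the text after the last 'Answer:' marker, or None if absent."""
    marker = "Answer:"
    idx = completion.rfind(marker)
    if idx == -1:
        return None
    return completion[idx + len(marker):].strip()
```

Comparing accuracy under `direct_prompt` and `zero_shot_cot_prompt` on the same questions gives the per-dataset CoT gain discussed here.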

Does the answer format impact where CoT will help? Not much. Free response capabilities required for BigGen Bench may not benefit from pre-planning.

Are the gains in Knowledge, Soft Reasoning, and Commonsense significant? Mostly no, except for MMLU, StrategyQA, and MuSR.
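These notes do not say how significance was assessed. One common way to check whether a CoT gain is more than noise is a paired bootstrap over per-question correctness. The sketch below assumes two 0/1 correctness lists for the same questions; `paired_bootstrap` is a hypothetical helper name, and this is not necessarily the procedure the paper used.

```python
import random


def paired_bootstrap(direct, cot, n_resamples=2000, seed=0):
    """Fraction of resamples in which CoT does NOT beat direct answering.

    `direct` and `cot` are equal-length lists of 0/1 correctness values,
    aligned so that index i refers to the same question in both lists.
    A small return value suggests the CoT gain is unlikely to be noise.
    """
    if len(direct) != len(cot):
        raise ValueError("both lists must cover the same questions")
    rng = random.Random(seed)
    n = len(direct)
    not_better = 0
    for _ in range(n_resamples):
        # Resample question indices with replacement, keeping pairs intact.
        idx = [rng.randrange(n) for _ in range(n)]
        diff = sum(cot[i] - direct[i] for i in idx)
        if diff <= 0:
            not_better += 1
    return not_better / n_resamples
```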

🤖

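Novelty and distinctive contributions of the paper:

- Systematic meta-analysis: The paper runs a meta-analysis of more than 100 related papers and summarizes how Chain-of-Thought (CoT) performs across different tasks. A literature review at this scale is a useful reference for understanding where CoT applies.

- Quantitative experiments over many models and datasets: The paper evaluates 14 contemporary language models on 20 datasets, covering task types from math and logic to commonsense reasoning. The results show that CoT has a clear advantage on math and symbolic reasoning tasks, while gains on other tasks are limited.

- Linking task type to CoT effectiveness: By splitting questions according to whether they involve symbolic operations (for example, whether they contain "="), the paper shows that CoT's main advantage is concentrated in tasks that need mathematical, logical, or symbolic reasoning. This fine-grained analysis gives future work a clear direction.

- Separating planning from execution: The paper further analyzes what CoT contributes on symbolic reasoning tasks. It finds that the gain comes mainly from the execution stage (symbolic computation), yet CoT still falls short of handing that execution to an external tool. This motivates work on combining language models with tools.

- Tool augmentation versus CoT: The paper compares CoT with tool-augmented approaches (such as a Python interpreter or a logic solver) and finds that tool augmentation performs better on symbolic reasoning tasks, which points to the need for more efficient reasoning paradigms.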
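The "=" split can be sketched as a simple partition of evaluation records. This is a minimal illustration under stated assumptions: the record fields (`question`, `direct_correct`, `cot_correct`) are hypothetical, only the question text is checked for "=", and the records below are made-up toy data, not results from the paper.

```python
def cot_gain_by_equals(records):
    """Return the CoT accuracy gain for questions with and without '='.

    Each record is a dict with a 'question' string and two booleans,
    'direct_correct' and 'cot_correct'. Empty groups map to None.
    """
    groups = {"with_equals": [], "without_equals": []}
    for record in records:
        key = "with_equals" if "=" in record["question"] else "without_equals"
        groups[key].append(record)

    gains = {}
    for key, rows in groups.items():
        if not rows:
            gains[key] = None
            continue
        direct_acc = sum(r["direct_correct"] for r in rows) / len(rows)
        cot_acc = sum(r["cot_correct"] for r in rows) / len(rows)
        gains[key] = cot_acc - direct_acc
    return gains


# Toy records for illustration only.
toy_records = [
    {"question": "If 2x + 1 = 7, what is x?", "direct_correct": False, "cot_correct": True},
    {"question": "Given y = 3x and x = 4, find y.", "direct_correct": False, "cot_correct": True},
    {"question": "Which planet is closest to the sun?", "direct_correct": True, "cot_correct": True},
    {"question": "Who wrote Hamlet?", "direct_correct": False, "cot_correct": False},
]
gains = cot_gain_by_equals(toy_records)
```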

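Problems in the paper and suggested improvements:

- Limited task coverage: Although the paper covers many task types, it says little about long-horizon planning tasks (such as complex multi-step reasoning) and generation tasks. These tasks may place different demands on CoT, so future work should broaden the task coverage.

- Data contamination: The paper notes that some models may have seen the evaluation datasets during training, but it does not offer a clear remedy. Future work could use entirely new or manually constructed datasets to avoid possible contamination.

- Little exploration of how to improve non-math tasks: The paper shows that CoT brings small gains on non-math tasks but does not examine in depth how to change that. Future work could design CoT strategies tailored to non-math tasks.

- Complexity of tool augmentation: Tool-augmented methods perform well, but they are complex to implement and depend heavily on the quality of the plan the model generates. Future work should look at making model-generated plans more accurate, which would lower the barrier to using tools.

- Insufficient analysis of user cost and efficiency: The paper mentions that CoT is computationally expensive but does not analyze user cost and efficiency in detail. Future experiments could quantify the trade-off between compute cost and performance for each method.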

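Innovation points and research paths suggested by the paper's content and results:

- Innovation 1: Dynamic CoT strategies based on task characteristics. Explore strategies that adjust the CoT approach to the task type, and in particular design new reasoning paradigms for non-math tasks.

- Innovation 2: Combining CoT with multimodal information. Study how to extend CoT to multimodal tasks, such as reasoning tasks that combine vision, speech, and other inputs.

- Innovation 3: Improving the plan-generation module of tool-augmented methods. Develop a more robust plan-generation module for tool-augmented methods to improve their applicability and efficiency on symbolic reasoning tasks.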

Research plans for the new research paths:

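Research path 1: Dynamic CoT strategies based on task characteristics

- Methods:

  - Collect datasets covering several task types (such as commonsense reasoning, text classification, and generation).

  - Design a dynamic CoT strategy that adjusts the CoT prompt according to task characteristics (such as question length and number of reasoning steps).

  - Test the dynamic CoT strategy on multiple language models and compare it with static CoT and direct answering.

- Steps:

  - Categorize existing tasks and extract feature labels (such as whether symbolic operations are involved).

  - Develop a dynamic prompt-generation algorithm that produces a CoT prompt suited to the task's characteristics.

  - Compare the performance of the different prompting strategies and measure the gain from dynamic CoT.

- Expected outcomes:

  - A significant performance improvement from dynamic CoT on non-math tasks.

  - A general set of rules for matching task characteristics to CoT strategies.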
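A very simple version of such a dynamic strategy is a rule-based router that uses CoT only when a question looks symbolic. This is a minimal sketch under assumptions: the regular expression, the helper names `needs_cot` and `build_prompt`, and the prompt wording are all hypothetical, and a real system would likely need richer task features than this single heuristic.

```python
import re

# Hypothetical heuristic: digits or math-like operators suggest that the
# question involves symbolic manipulation. The hyphen is left out on purpose
# so that ordinary hyphenated words do not trigger the rule.
SYMBOLIC_PATTERN = re.compile(r"[=+*/^<>]|\d")


def needs_cot(question: str) -> bool:
    """Return True when the question looks like it needs symbolic reasoning."""
    return bool(SYMBOLIC_PATTERN.search(question))


def build_prompt(question: str) -> str:
    """Choose a CoT prompt or a direct prompt based on the heuristic."""
    if needs_cot(question):
        return f"{question}\nLet's think step by step."
    return f"{question}\nAnswer directly."
```

For example, `build_prompt("Solve x + 3 = 7")` produces a CoT prompt, while `build_prompt("Who wrote Hamlet?")` produces a direct prompt, which also saves the extra tokens CoT would spend.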

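Research path 2: Combining CoT with multimodal information

- Methods:

  - Select multimodal task datasets (such as visual question answering over images and text).

  - Extend CoT to multimodal inputs and design multimodal CoT prompts.

  - Evaluate multimodal CoT on multimodal tasks and compare it with conventional methods.

- Steps:

  - Build CoT prompt templates for multimodal tasks that generate reasoning steps from image or speech descriptions.

  - Use a multimodal pretrained model (such as CLIP) to process non-text inputs and have it work together with a language model.

  - Test the multimodal CoT method and analyze its effect on reasoning quality.

- Expected outcomes:

  - A significant improvement from multimodal CoT on vision-and-text reasoning tasks.

  - A general framework for designing multimodal CoT.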

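Research path 3: Improving the plan-generation module of tool-augmented methods

- Methods:

  - Develop a reinforcement-learning-based plan-generation module for symbolic reasoning tasks.

  - Use automated tools (such as a Python interpreter or a logic solver) to verify the accuracy of generated plans.

- Steps:

  - Design a reinforcement learning algorithm whose objective is to generate executable plans.

  - Train and test the plan-generation module on symbolic reasoning tasks.

  - Combine the improved plan-generation module with existing tool-augmented methods and measure the performance gain.

- Expected outcomes:

  - A significantly lower plan-generation error rate for tool-augmented methods.

  - An efficient solution for symbolic reasoning that further narrows the performance gap with tool-augmented methods.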
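Verifying plans with an interpreter could supply the reward signal for such a module. The sketch below is one possible reward function, assuming plans are Python snippets that store their result in a variable named `answer`; `plan_reward` is a hypothetical name, the binary reward is a design choice made for this example, and no reinforcement learning loop is shown.

```python
def plan_reward(plan: str, expected: object) -> float:
    """Return 1.0 if the plan runs and produces the expected answer, else 0.0.

    Assumption: plans come from a sandboxed or trusted setting; exec must
    not be run on untrusted text in a real system.
    """
    namespace: dict = {}
    try:
        exec(plan, {}, namespace)
    except Exception:
        # Syntax errors and runtime errors both count as a failed plan.
        return 0.0
    return 1.0 if namespace.get("answer") == expected else 0.0
```

A plan that fails to parse, raises at runtime, or never sets `answer` receives zero reward, so the generator is pushed toward plans that are both executable and correct.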


Others

Author: Geaming

Permalink:

Copyright notice: Unless otherwise stated, all posts on this blog are licensed under BY-NC-SA. Please credit the source when reposting!
